People get very attached to the way a product they use currently works and feels. If someone proposes a change to the status quo, there will be pushback, even if the proposal would bring significant improvements. So, when JetBrains announced they were working on a new UI which “reduces visual complexity, provides easy access to […]

Actix web describes itself as a small, pragmatic, and extremely fast Rust web framework. The README has an example to start with, so let’s create a new Rust project with `cargo new web_app` (Cargo reports: Created binary (application) `web_app` package) and then `cd web_app`. First we need these dependencies in the `[dependencies]` section of our Cargo.toml: `actix-web = "2"`, actix-rt = […]

In Rust, there are two types of references: shared references and mutable references. A shared reference is written with `&` (as in `&T`), and a mutable reference with `&mut` (as in `&mut T`). The docs tell us that a ‘reference lets you refer to a value without taking ownership of it.’ What does that mean? What is ownership? Let’s look […]
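As a quick illustration of the two kinds of references, here is a minimal sketch (the function names `total_len` and `add_word` are made up for the example). Any number of shared borrows can coexist, a mutable borrow needs exclusive access, and passing a reference never moves the value, so the caller keeps ownership throughout.

```rust
// Shared reference (&[String]): read-only access; the caller keeps ownership.
fn total_len(words: &[String]) -> usize {
    words.iter().map(|w| w.len()).sum()
}

// Mutable reference (&mut Vec<String>): exclusive access that allows mutation.
fn add_word(words: &mut Vec<String>, word: &str) {
    words.push(word.to_string());
}

fn main() {
    let mut words = vec![String::from("hello"), String::from("world")];

    // Several shared borrows can exist at the same time.
    let a = &words;
    let b = &words;
    println!("{} {}", a.len(), b.len()); // prints "2 2"

    // This mutable borrow is allowed because `a` and `b` are not used again.
    add_word(&mut words, "rust");

    // Adding `println!("{}", a.len());` here would be rejected by the borrow
    // checker: `a` would then still be alive across the `&mut` borrow above.

    // `words` was never moved, so we still own it and can borrow it again.
    println!("{}", total_len(&words)); // prints "14"
}
```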

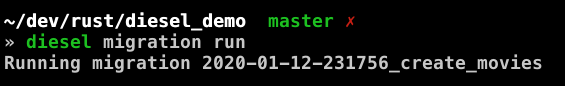

Diesel describes itself as the most productive way to interact with databases in Rust because of its safe and composable abstractions over queries (http://diesel.rs/). To try it out, create a new project using Cargo, then edit the Cargo.toml file to add the `diesel` and `dotenv` dependencies. Next, you’ll need to install the standalone Diesel CLI. In […]

In the beginning, there were physical machines. Like, actual computers sitting somewhere in your office that you had to configure, maintain, etc. You used them to serve your website or web service to people. Then there were off-premises managed hosts, I guess. They’re your computers (or at least for the time you’re paying for them), but […]

In this post (series?), I’d like to explore how to write a program in a type-safe and testable way. I’m using Scala because it’s the language I’m most proficient in; this would be prettier and less boilerplatey in Haskell. The basic point that I hope to get across in this post (and the potential follow-ups) […]

I’ve been messing around with Scala a lot recently, and I think the language hits a real sweet spot. Scala is a multi-paradigm language, which means you can write in an exclusively procedural, imperative, or functional style, or mix the three. As Martin Odersky (the creator of the language) mentions in his […]

In order to use textured pattern images as backgrounds for Layouts and Views in Android, it’s not enough to simply crop out a part of the image and run it through the Draw 9-Patch tool. Similarly, if you simply set the background resource/drawable to your image, you’ll find that it won’t look right. You’ll get […]

What’s a Looper, what’s a Handler, and what’s up with “Can’t create handler inside thread that has not called Looper.prepare()”? NOTE: as always, consider everything I say as prefaced with “my understanding is that…”. A Looper is a simple message loop for a thread. Once `loop()` is called, an unending loop (literally `while (true)`) waits […]
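Looper and Handler are Android (Java) APIs, so the following is only a language-neutral analog of the "message loop for a thread" idea, written in Rust with `std::sync::mpsc`. The names `Handler`, `post`, `run_loop`, and `start_looper` are hypothetical and chosen to mirror the Android concepts; this is not Android's API or implementation. One deliberate difference: Android's loop never exits on its own, while this one stops once every `Handler` (channel sender) has been dropped, so the example can terminate.

```rust
use std::sync::mpsc::{self, Receiver, Sender};
use std::thread;

// A message is a boxed closure to be run on the loop's thread.
type Message = Box<dyn FnOnce() + Send + 'static>;

// Hypothetical Handler analog: posts messages into one loop's queue.
struct Handler {
    queue: Sender<Message>,
}

impl Handler {
    fn post<F: FnOnce() + Send + 'static>(&self, f: F) {
        // Ignore the error that occurs if the loop has already exited.
        let _ = self.queue.send(Box::new(f));
    }
}

// Loop analog: block until the next message arrives, run it, repeat.
// Returns once the queue is empty and every sender has been dropped.
fn run_loop(queue: Receiver<Message>) {
    while let Ok(msg) = queue.recv() {
        msg();
    }
}

// Analog of prepare() + loop() on a new thread: the queue exists before any
// Handler does, so a Handler always has a loop to post to.
fn start_looper() -> (Handler, thread::JoinHandle<()>) {
    let (tx, rx) = mpsc::channel::<Message>();
    let join = thread::spawn(move || run_loop(rx));
    (Handler { queue: tx }, join)
}

fn main() {
    let (handler, join) = start_looper();
    for i in 0..3 {
        // Each closure runs on the loop thread, in the order it was posted.
        handler.post(move || println!("message {}", i));
    }
    drop(handler); // closes the queue so run_loop can return
    join.join().unwrap();
}
```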

The topic of Context in Android seems to be confusing to many. People just know that Context is needed quite often to do basic things in Android. People sometimes panic because they try to perform some operation that requires the Context and they don’t know how to “get” the right Context. I’m going to […]